3.5 Deploy the Prometheus + Grafana monitoring stack

The stack can be installed either with kube-prometheus or with Helm; this section covers the kube-prometheus installation.

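Note: when installing with kube-prometheus, skip the separate Prometheus, Grafana and metrics-server installations from the previous sections. All of them are already included; the resource metrics API (metrics.k8s.io) is served by prometheus-adapter instead of metrics-server.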

3.5.1 Install kube-prometheus

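Download the source (v0.10.0 is used here; pick the release that matches your Kubernetes version according to the compatibility matrix in the kube-prometheus README)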

wget https://github.com/prometheus-operator/kube-prometheus/archive/refs/tags/v0.10.0.tar.gz -O kube-prometheus_v0.10.0.tar.gz

tar xf kube-prometheus_v0.10.0.tar.gz

cd kube-prometheus-0.10.0

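Modify the YAML manifests

Change the grafana Service to type LoadBalancer. It only receives an external IP if the cluster has a load-balancer implementation (for example MetalLB on bare metal); otherwise EXTERNAL-IP stays <pending>, and NodePort is the usual alternative.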

cp manifests/grafana-service.yaml{,.ori}

sed -i '/spec:/a\  type: LoadBalancer' manifests/grafana-service.yaml

Check the change

diff manifests/grafana-service.yaml.ori manifests/grafana-service.yaml

11a12

> type: LoadBalancer

Change the prometheus-k8s Service (prometheus-service.yaml) to type LoadBalancer

cp manifests/prometheus-service.yaml{,.ori}

sed -i '/spec:/a\  type: LoadBalancer' manifests/prometheus-service.yaml

Check the change

diff manifests/prometheus-service.yaml.ori manifests/prometheus-service.yaml

12a13

> type: LoadBalancer
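Running either sed command a second time appends another type line (a duplicate key) under spec:. A minimal idempotent sketch, assuming the manifest has a top-level spec: line and two-space indentation as in the kube-prometheus v0.10.0 files; set_service_type is a hypothetical helper name, not part of kube-prometheus:

```shell
# Hypothetical helper: set spec.type of a Service manifest in place.
# Assumes a top-level "spec:" line and two-space YAML indentation.
set_service_type() {
  local file=$1 type=$2 tmp
  tmp=$(mktemp) || return 1
  awk -v t="$type" '
    /^  type:/ { next }                # drop an existing two-space "type:" line (spec.type here)
    { print }
    /^spec:$/ { print "  type: " t }   # re-insert it right after spec:
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  cat "$tmp" > "$file"   # overwrite in place, keeping the file's permissions
  rm -f "$tmp"
}

# Usage (from the kube-prometheus-0.10.0 directory):
#   set_service_type manifests/grafana-service.yaml LoadBalancer
#   set_service_type manifests/prometheus-service.yaml LoadBalancer
```

Using awk also avoids depending on GNU sed's one-line a\ syntax and its whitespace handling; pass NodePort instead of LoadBalancer when the cluster has no load-balancer implementation.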

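Change the image registry

If k8s.gcr.io is not reachable from your nodes, run the following to replace the kube-state-metrics image with a Docker Hub mirror (willdockerhub is a third-party account):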

sed -i '/image:/s@k8s.gcr.io/kube-state-metrics@willdockerhub@' $(grep -l image: manifests/*.yaml)
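The command above only rewrites kube-state-metrics images; other manifests may also pull from k8s.gcr.io (the prometheus-adapter image may be one of them). A small sketch that lists the image references still pointing at k8s.gcr.io, so each can be substituted the same way with a mirror you trust; list_gcr_images is a hypothetical helper name:

```shell
# Hypothetical helper: print the unique image references under DIR
# (default: manifests) that still point at k8s.gcr.io.
list_gcr_images() {
  local dir=${1:-manifests}
  grep -ho 'image: *k8s\.gcr\.io/[^[:space:]]*' "$dir"/*.yaml \
    | sed 's/^image: *//' \
    | sort -u
}

# Usage (after the sed above):
#   list_gcr_images manifests
```

An empty result means no manifest references k8s.gcr.io any more.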

Deploy the CRDs (manifests/setup also creates the monitoring namespace)

kubectl create -f manifests/setup

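kubectl apply is not used here: client-side apply stores the whole object in the kubectl.kubernetes.io/last-applied-configuration annotation, and the prometheuses CRD is too large for it, so apply fails with the error below. Use kubectl create instead (kubectl apply --server-side, which does not write that annotation, also works):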

The CustomResourceDefinition "prometheuses.monitoring.coreos.com" is invalid: metadata.annotations: Too long: must have at most 262144 bytes

Output:

customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created

namespace/monitoring created
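If kubectl apply -f manifests runs before the API server serves the new CRDs, it can fail with errors such as "no matches for kind". A minimal sketch of a wait step, assuming (as a simplification) that the ServiceMonitor API being served means the setup step has taken effect; wait_for_crds is a hypothetical helper name:

```shell
# Hypothetical helper: poll until the API server serves ServiceMonitor objects
# (one of the CRDs created from manifests/setup), giving up after TIMEOUT seconds.
wait_for_crds() {
  local timeout=${1:-60} waited=0
  until kubectl get servicemonitors --all-namespaces >/dev/null 2>&1; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "CRDs not served after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}

# Usage:
#   kubectl create -f manifests/setup && wait_for_crds 120 && kubectl apply -f manifests
```

kubectl wait --for condition=Established --all CustomResourceDefinition is a built-in alternative that waits on the CRD status conditions instead of polling one resource type.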

Deploy kube-prometheus

kubectl apply -f manifests

Output:

alertmanager.monitoring.coreos.com/main created

poddisruptionbudget.policy/alertmanager-main created

prometheusrule.monitoring.coreos.com/alertmanager-main-rules created

secret/alertmanager-main created

service/alertmanager-main created

serviceaccount/alertmanager-main created

servicemonitor.monitoring.coreos.com/alertmanager-main created

clusterrole.rbac.authorization.k8s.io/blackbox-exporter created

clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter created

configmap/blackbox-exporter-configuration created

deployment.apps/blackbox-exporter created

service/blackbox-exporter created

serviceaccount/blackbox-exporter created

servicemonitor.monitoring.coreos.com/blackbox-exporter created

secret/grafana-config created

secret/grafana-datasources created

configmap/grafana-dashboard-alertmanager-overview created

configmap/grafana-dashboard-apiserver created

configmap/grafana-dashboard-cluster-total created

configmap/grafana-dashboard-controller-manager created

configmap/grafana-dashboard-k8s-resources-cluster created

configmap/grafana-dashboard-k8s-resources-namespace created

configmap/grafana-dashboard-k8s-resources-node created

configmap/grafana-dashboard-k8s-resources-pod created

configmap/grafana-dashboard-k8s-resources-workload created

configmap/grafana-dashboard-k8s-resources-workloads-namespace created

configmap/grafana-dashboard-kubelet created

configmap/grafana-dashboard-namespace-by-pod created

configmap/grafana-dashboard-namespace-by-workload created

configmap/grafana-dashboard-node-cluster-rsrc-use created

configmap/grafana-dashboard-node-rsrc-use created

configmap/grafana-dashboard-nodes created

configmap/grafana-dashboard-persistentvolumesusage created

configmap/grafana-dashboard-pod-total created

configmap/grafana-dashboard-prometheus-remote-write created

configmap/grafana-dashboard-prometheus created

configmap/grafana-dashboard-proxy created

configmap/grafana-dashboard-scheduler created

configmap/grafana-dashboard-workload-total created

configmap/grafana-dashboards created

deployment.apps/grafana created

service/grafana created

serviceaccount/grafana created

servicemonitor.monitoring.coreos.com/grafana created

prometheusrule.monitoring.coreos.com/kube-prometheus-rules created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

deployment.apps/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kube-state-metrics-rules created

service/kube-state-metrics created

serviceaccount/kube-state-metrics created

servicemonitor.monitoring.coreos.com/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kubernetes-monitoring-rules created

servicemonitor.monitoring.coreos.com/kube-apiserver created

servicemonitor.monitoring.coreos.com/coredns created

servicemonitor.monitoring.coreos.com/kube-controller-manager created

servicemonitor.monitoring.coreos.com/kube-scheduler created

servicemonitor.monitoring.coreos.com/kubelet created

clusterrole.rbac.authorization.k8s.io/node-exporter created

clusterrolebinding.rbac.authorization.k8s.io/node-exporter created

daemonset.apps/node-exporter created

prometheusrule.monitoring.coreos.com/node-exporter-rules created

service/node-exporter created

serviceaccount/node-exporter created

servicemonitor.monitoring.coreos.com/node-exporter created

clusterrole.rbac.authorization.k8s.io/prometheus-k8s created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created

poddisruptionbudget.policy/prometheus-k8s created

prometheus.monitoring.coreos.com/k8s created

prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s-config created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

service/prometheus-k8s created

serviceaccount/prometheus-k8s created

servicemonitor.monitoring.coreos.com/prometheus-k8s created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io configured

clusterrole.rbac.authorization.k8s.io/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader configured

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created

clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created

clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created

configmap/adapter-config created

deployment.apps/prometheus-adapter created

poddisruptionbudget.policy/prometheus-adapter created

rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created

service/prometheus-adapter created

serviceaccount/prometheus-adapter created

servicemonitor.monitoring.coreos.com/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/prometheus-operator created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created

deployment.apps/prometheus-operator created

prometheusrule.monitoring.coreos.com/prometheus-operator-rules created

service/prometheus-operator created

serviceaccount/prometheus-operator created

servicemonitor.monitoring.coreos.com/prometheus-operator created

Check the deployment

kubectl get po -n monitoring

Output:

NAME READY STATUS RESTARTS AGE

alertmanager-main-0 2/2 Running 0 3m

alertmanager-main-1 2/2 Running 0 3m

alertmanager-main-2 2/2 Running 0 2m59s

blackbox-exporter-6798fb5bb4-dmjxs 3/3 Running 0 3m27s

grafana-78d8cfccff-snlqn 1/1 Running 0 3m22s

kube-state-metrics-5fcb7d6fcb-d6qdg 3/3 Running 0 3m20s

node-exporter-4qq75 2/2 Running 0 3m18s

node-exporter-c7v7m 2/2 Running 0 3m18s

node-exporter-jzc4t 2/2 Running 0 3m18s

prometheus-adapter-7dc46dd46d-bwsg6 1/1 Running 0 3m10s

prometheus-adapter-7dc46dd46d-mgrvj 1/1 Running 0 3m10s

prometheus-k8s-0 2/2 Running 0 2m57s

prometheus-k8s-1 2/2 Running 0 2m57s

prometheus-operator-7ddc6877d5-znn7h 2/2 Running 0 3m8s
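Checking this list by eye gets error-prone as the number of pods grows. A small sketch that prints the pods whose STATUS is not Running or whose READY count is short, assuming the column layout of kubectl get po --no-headers shown above; not_ready_pods is a hypothetical helper name:

```shell
# Hypothetical helper: read `kubectl get pods --no-headers` lines on stdin
# (columns: NAME READY STATUS RESTARTS AGE) and print the names of pods
# that are not Running or have fewer ready containers than total.
not_ready_pods() {
  awk '{
    split($2, ready, "/")          # "2/3" -> ready[1]=2, ready[2]=3
    if ($3 != "Running" || ready[1] != ready[2]) print $1
  }'
}

# Usage:
#   kubectl get po -n monitoring --no-headers | not_ready_pods
```

An empty result means every pod is up; kubectl wait --for=condition=Ready pods --all -n monitoring --timeout=300s blocks until the same holds.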

kubectl get svc -n monitoring

Output:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager-main ClusterIP 10.107.184.145 <none> 9093/TCP,8080/TCP 3m52s

alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 3m23s

blackbox-exporter ClusterIP 10.98.182.126 <none> 9115/TCP,19115/TCP 3m50s

grafana LoadBalancer 10.111.160.67 192.168.122.194 3000:30991/TCP 3m45s

kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 3m43s

node-exporter ClusterIP None <none> 9100/TCP 3m41s

prometheus-adapter ClusterIP 10.108.64.100 <none> 443/TCP 3m34s

prometheus-k8s LoadBalancer 10.102.246.195 192.168.122.195 9090:30280/TCP,8080:30097/TCP 3m38s

prometheus-operated ClusterIP None <none> 9090/TCP 3m20s

prometheus-operator ClusterIP None <none> 8443/TCP 3m32s
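Instead of copying the EXTERNAL-IP by hand, it can be read from the Service status with a JSONPath query. A sketch, assuming the Service is of type LoadBalancer, reports an IP (not a hostname) in status.loadBalancer.ingress, and lists its web port first; lb_url is a hypothetical helper name:

```shell
# Hypothetical helper: print http://<external-ip>:<first-port> for a
# LoadBalancer Service, e.g. grafana or prometheus-k8s in "monitoring".
lb_url() {
  local svc=$1 ns=${2:-monitoring} ip port
  ip=$(kubectl -n "$ns" get svc "$svc" \
        -o jsonpath='{.status.loadBalancer.ingress[0].ip}') || return 1
  port=$(kubectl -n "$ns" get svc "$svc" \
        -o jsonpath='{.spec.ports[0].port}') || return 1
  if [ -z "$ip" ]; then
    echo "$svc has no external IP yet" >&2
    return 1
  fi
  echo "http://${ip}:${port}"
}

# Usage:
#   lb_url grafana
#   lb_url prometheus-k8s
```

Without a load balancer, kubectl -n monitoring port-forward svc/grafana 3000 makes Grafana reachable at http://localhost:3000 from the machine running kubectl.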

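Open http://192.168.122.194:3000 in a browser to access Grafana (192.168.122.194 is the EXTERNAL-IP assigned to the grafana Service in the output above; use the address from your own output).

The default username and password are admin / admin; Grafana asks you to change the password after the first login.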

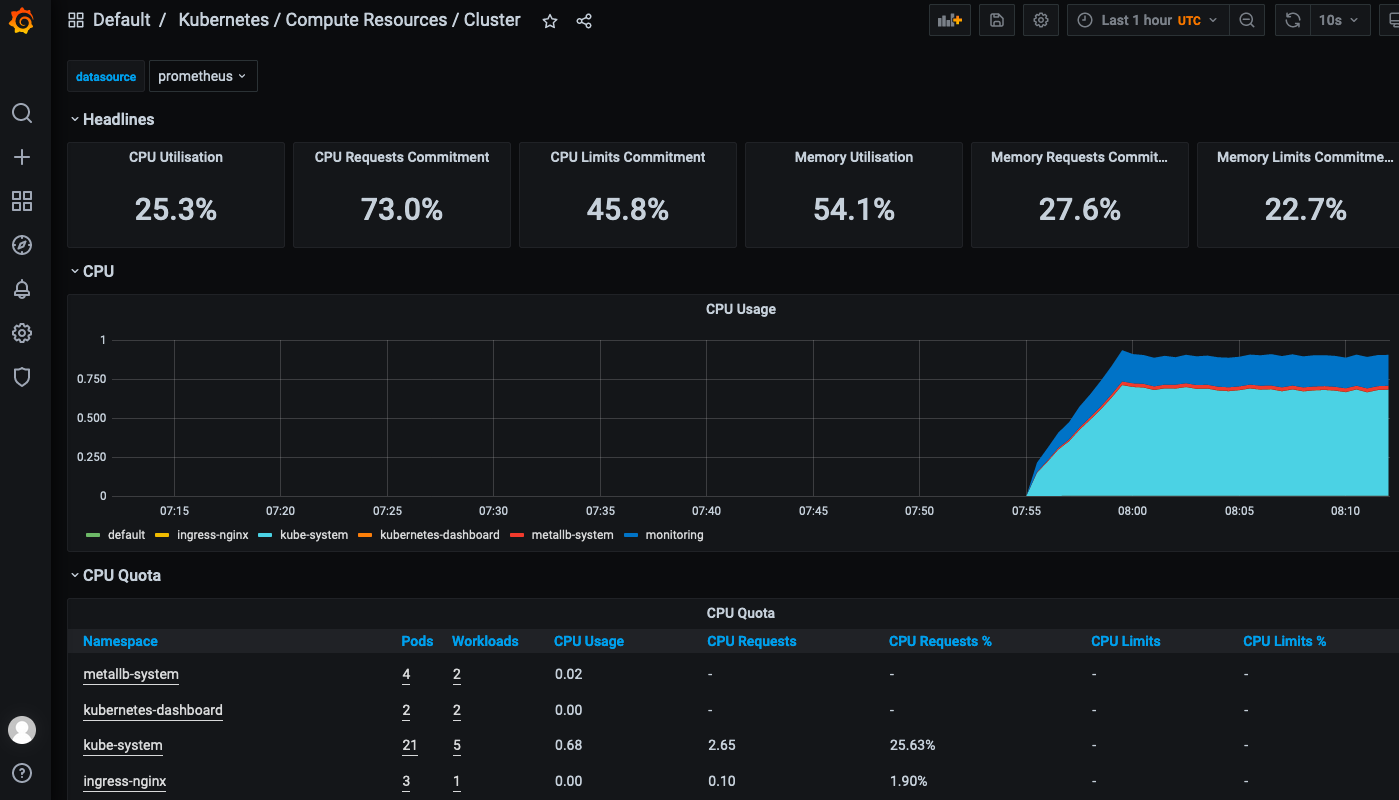

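The bundled dashboards can be used right away.

Detailed usage is not covered here.

Data persistence

Not covered here. Note that by default the Prometheus and Grafana pods in these manifests store their data in emptyDir volumes, so collected metrics and any changes made in Grafana are lost when the pods are recreated.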