使用kube-prometheus快速部署监控系统

使用kube-prometheus快速部署监控系统

简介

根据官方的描述kube-prometheus集kubernetes资源清单、Grafana仪表盘、Prometheus规则文件以及文档和脚本于一身,通过使用prometheus operator提供易于操作的端到端Kubernetes集群监控。 包含以下组件:

- Prometheus Operator

- 高可用Prometheus

- 高可用AlertManager

- Prometheus node-exporter

- Prometheus Adapter for Kubernetes Metrics APIS

- kube-state-metrics

- Grafana 更多信息可以前往github进行查看:

前提

- 需要有Kubernetes集群环境

1kubectl get nodes

2NAME STATUS ROLES AGE VERSION

3test-node0 Ready <none> 15d v1.18.2

4test-node1 Ready <none> 15d v1.18.2

- 兼容性

| kube-prometheus stack | Kubernetes 1.16 | Kubernetes 1.17 | Kubernetes 1.18 | Kubernetes 1.19 | Kubernetes 1.20 |

|---|---|---|---|---|---|

| release-0.4 | ✔ (v1.16.5+) | ✔ | ✗ | ✗ | ✗ |

| release-0.5 | ✗ | ✗ | ✔ | ✗ | ✗ |

| release-0.6 | ✗ | ✗ | ✔ | ✔ | ✗ |

| release-0.7 | ✗ | ✗ | ✗ | ✔ | ✔ |

| HEAD | ✗ | ✗ | ✗ | ✔ | ✔ |

当前版本1.18.2可以使用release-0.6版本进行部署。

安装

下载代码

1wget https://github.com/prometheus-operator/kube-prometheus/archive/v0.6.0.tar.gz

2tar xf v0.6.0.tar.gz

3cd kube-prometheus-0.6.0

4ls

5DCO README.md examples jsonnet scripts

6LICENSE build.sh experimental jsonnetfile.json sync-to-internal-registry.jsonnet

7Makefile code-of-conduct.md go.mod jsonnetfile.lock.json test.sh

8NOTICE docs go.sum kustomization.yaml tests

9OWNERS example.jsonnet hack manifests

快速安装

默认情况下所有资源创建在monitoring名称空间下

1kubectl create -f manifests/setup

2until kubectl get servicemonitors --all-namespaces ; do date; sleep 1; echo ""; done

3kubectl create -f manifests/

国内由于某些原因部分源下载比较慢,可能导致镜像拉取失败。检查pod运行状态

1kubectl get pods -n monitoring

2NAME READY STATUS RESTARTS AGE

3alertmanager-main-0 2/2 Running 0 103m

4alertmanager-main-1 2/2 Running 0 103m

5alertmanager-main-2 2/2 Running 0 103m

6grafana-67dfc5f687-w27mv 1/1 Running 0 118m

7kube-state-metrics-69d4c7c69d-q5j8p 3/3 Running 0 118m

8node-exporter-mbt65 2/2 Running 0 118m

9node-exporter-stjfh 2/2 Running 0 118m

10prometheus-adapter-66b855f564-xf98f 1/1 Running 0 118m

11prometheus-k8s-0 3/3 Running 1 103m

12prometheus-k8s-1 3/3 Running 0 103m

13prometheus-operator-57859b8b59-xc6z7 2/2 Running 0 118m

检查服务

1kubectl get svc -n monitoring

2NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

3alertmanager-main ClusterIP 10.96.200.17 <none> 9093/TCP 120m

4alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 105m

5grafana ClusterIP 10.96.185.117 <none> 3000/TCP 120m

6kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 120m

7node-exporter ClusterIP None <none> 9100/TCP 120m

8prometheus-adapter ClusterIP 10.96.154.67 <none> 443/TCP 120m

9prometheus-k8s ClusterIP 10.96.211.235 <none> 9090/TCP 120m

10prometheus-operated ClusterIP None <none> 9090/TCP 105m

11prometheus-operator ClusterIP None <none> 8443/TCP 120m

部署nginx

1kubectl create deploy nginx --image=nginx:1.6.1-alpine

2kubectl get pods

3NAME READY STATUS RESTARTS AGE

4nginx-f4c7fc54d-5qmpq 1/1 Running 0 23s

配置nginx-exporter

要启用nginx-exporter需要开始nginx stub页面统计功能,并将nginx配置以configmap挂载进去

1cat nginx_stub.conf

2server {

3 listen 8888;

4 server_name _;

5 location /stub_status {

6 stub_status on;

7 access_log off;

8 }

9}

1kubectl create configmap nginx-stub --from-file=nginx_stub.conf

挂载配置文件

nginx.yaml

1---

2apiVersion: apps/v1

3kind: Deployment

4metadata:

5 name: nginx

6spec:

7 selector:

8 matchLabels:

9 app: nginx

10 replicas: 1

11 template:

12 metadata:

13 labels:

14 app: nginx

15 spec:

16 containers:

17 - name: nginx

18 image: nginx:1.16.1-alpine

19 volumeMounts:

20 - mountPath: /etc/nginx/conf.d/nginx_stub.conf

21 subPath: nginx_stub.conf

22 name: nginx-conf

23 volumes:

24 - name: nginx-conf

25 configMap:

26 name: nginx-stub

27---

28apiVersion: v1

29kind: Service

30metadata:

31 name: nginx

32 labels:

33 app: nginx

34spec:

35 selector:

36 app: nginx

37 ports:

38 - port: 80

39 targetPort: 80

40 name: http

41 - port: 8888

42 targetPort: 8888

43 name: nginx-stub

应用

1kubectl apply -f nginx.yaml

创建exporter

1---

2apiVersion: apps/v1

3kind: Deployment

4metadata:

5 name: nginx-exporter

6 labels:

7 app: nginx-exporter

8spec:

9 strategy:

10 rollingUpdate:

11 maxSurge: 1

12 maxUnavailable: 1

13 type: RollingUpdate

14 selector:

15 matchLabels:

16 app: nginx-exporter

17 template:

18 metadata:

19 labels:

20 app: nginx-exporter

21 spec:

22 containers:

23 - image: nginx/nginx-prometheus-exporter:0.8.0

24 name: nginx-exporter

25 imagePullPolicy: Always

26 args:

27 - "-nginx.scrape-uri=http://nginx:8888/stub_status" # 需要和前面配置的stub页面保持一致

28 resources:

29 requests:

30 cpu: 50m

31 memory: 64Mi

32 limits:

33 cpu: 50m

34 memory: 64Mi

35 ports:

36 - containerPort: 9113

37 name: http-metrics

38 volumeMounts:

39 - mountPath: /etc/localtime

40 name: timezone

41 volumes:

42 - name: timezone

43 hostPath:

44 path: /etc/localtime

45 restartPolicy: Always

46---

47apiVersion: v1

48kind: Service

49metadata:

50 name: nginx-exporter

51 labels:

52 app: nginx-exporter

53spec:

54 selector:

55 app: nginx-exporter

56 ports:

57 - port: 9113

58 targetPort: 9113

59 name: metrics

1

创建serviceMonitor

nginx-serviceMonitor.yaml

1apiVersion: monitoring.coreos.com/v1

2kind: ServiceMonitor

3metadata:

4 name: nginx-exporter

5spec:

6 endpoints:

7 - interval: 15s

8 port: metrics # 需要和service的port名称保持一致

9 selector:

10 matchLabels:

11 app: nginx-exporter

创建

1kubectl apply -f nginx-serviceMonitor.yaml

使用

对外暴露端口

默认安装后prometheus和grafana都是使用ClusterIP的形式,可以采用LoadBalancer或者ingress等形式对外提供服务。这里我们简单的使用NodePort进行暴露。

1kubectl -n monitoring patch svc grafana -p '{"spec":{"type": "NodePort"}}'

2kubectl -n monitoring patch svc prometheus-k8s -p '{"spec":{"type": "NodePort"}}'

3kubectl get svc -n monitoring | grep -E "grafana|prometheus-k8s"

4grafana NodePort 10.96.185.117 <none> 3000:32072/TCP 127m

5prometheus-k8s NodePort 10.96.211.235 <none> 9090:30787/TCP 127m

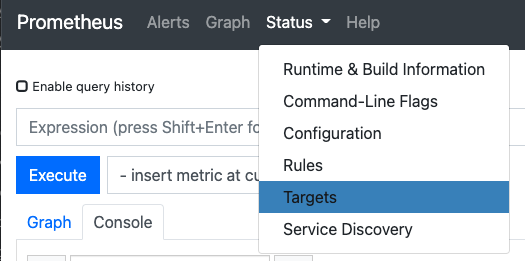

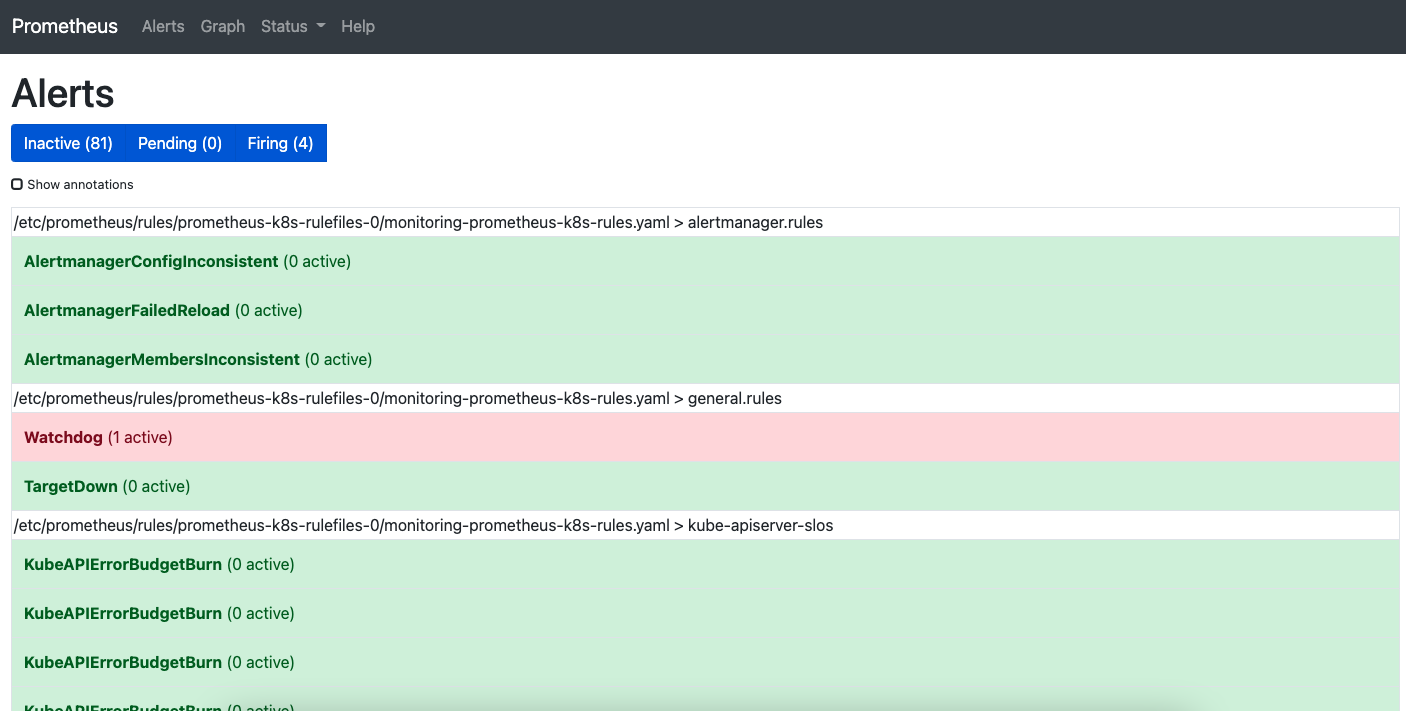

查看Prometheus

打开浏览器访问node节点的30787端口进行访问。

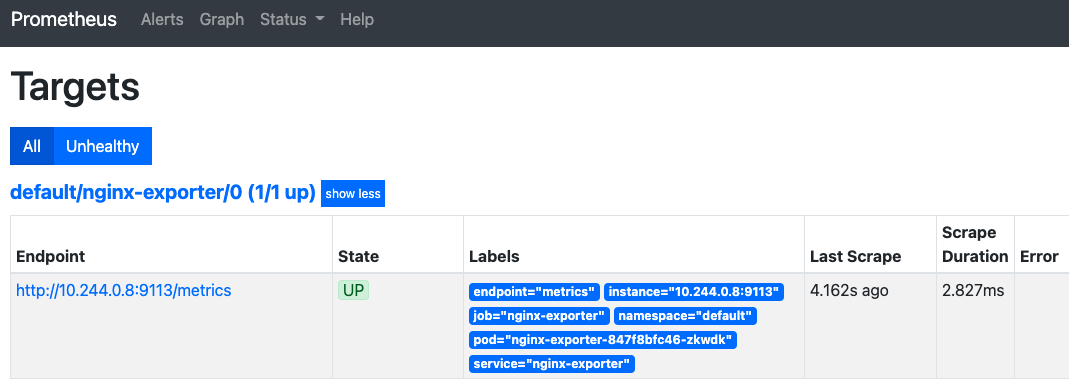

查看target

可以看到我们创建的nginx的target已经采集到了。

配置grafana

打开浏览器,访问node的32072

默认用户名admin,密码admin

首次登录提示修改密码。

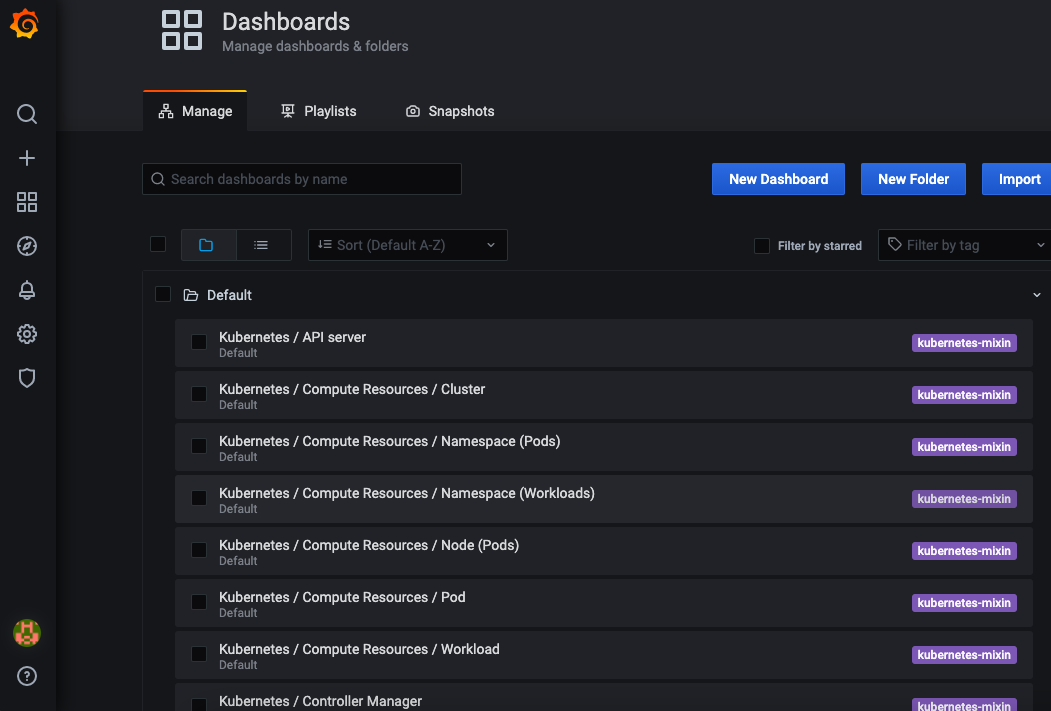

查看Dashboard

默认内置的部分dashboards

用户可以从Grafana的官网下载相应的Dashboard,也可以自己创建Dashboard。

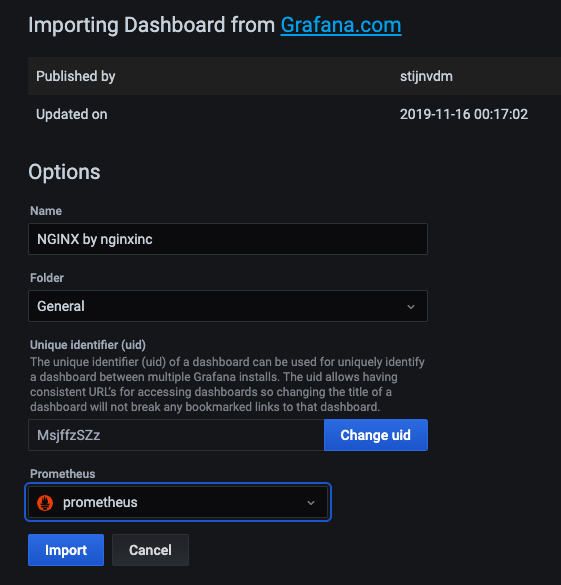

导入Dashboard

可以看到nginx的运行状况,活跃连接数,请求数等信息。

配置告警

默认情况下kube-prometheus已经内置了一系列的告警规则在里面,但是收不到告警信息的。

alertmanager-secret.yaml

1apiVersion: v1

2data: {}

3kind: Secret

4metadata:

5 name: alertmanager-main

6 namespace: monitoring

7stringData:

8 alertmanager.yaml: |-

9 "global":

10 "resolve_timeout": "5m"

11 "inhibit_rules":

12 - "equal":

13 - "namespace"

14 - "alertname"

15 "source_match":

16 "severity": "critical"

17 "target_match_re":

18 "severity": "warning|info"

19 - "equal":

20 - "namespace"

21 - "alertname"

22 "source_match":

23 "severity": "warning"

24 "target_match_re":

25 "severity": "info"

26 "receivers":

27 - "name": "Default"

28 - "name": "Watchdog"

29 - "name": "Critical"

30 "route":

31 "group_by":

32 - "namespace"

33 "group_interval": "5m"

34 "group_wait": "30s"

35 "receiver": "Default"

36 "repeat_interval": "12h"

37 "routes":

38 - "match":

39 "alertname": "Watchdog"

40 "receiver": "Watchdog"

41 - "match":

42 "severity": "critical"

43 "receiver": "Critical"

44type: Opaque

默认情况下时没有配置邮件或者其他告警渠道的。

通过获取官方文档找到相应的配置信息。https://prometheus.io/docs/alerting/latest/configuration/

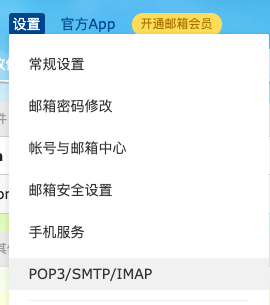

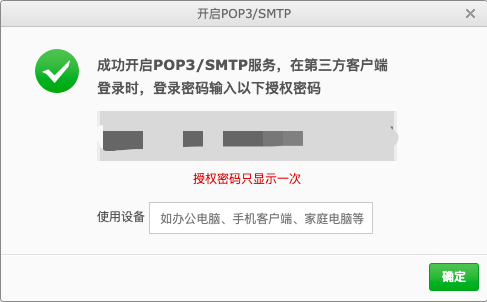

以163邮箱为例,先登录邮箱,开启SMTP功能。

修改配置信息如下

1apiVersion: v1

2data: {}

3kind: Secret

4metadata:

5 name: alertmanager-main

6 namespace: monitoring

7stringData:

8 alertmanager.yaml: |-

9 "global":

10 # 163SMTP邮件服务器

11 "smtp_smarthost": "smtp.163.com:25"

12 # 发邮件的邮箱

13 "smtp_from": "XXXXXXXX@163.com"

14 # 发邮件的邮箱用户名,也就是你的邮箱

15 "smtp_auth_username": "XXXXXX@163.com"

16 # 前面获取的授权密码,记住不是邮箱密码

17 "smtp_auth_password": "XXXXXXXXXXXXXXXX"

18 "resolve_timeout": "5m"

19 "inhibit_rules":

20 - "equal":

21 - "namespace"

22 - "alertname"

23 "source_match":

24 "severity": "critical"

25 "target_match_re":

26 "severity": "warning|info"

27 - "equal":

28 - "namespace"

29 - "alertname"

30 "source_match":

31 "severity": "warning"

32 "target_match_re":

33 "severity": "info"

34 "receivers":

35 - "name": "Email"

36 "email_configs":

37 # 接收邮件的邮箱,多个邮箱中间用空格隔开,也可以写多个to;尽量不要和发件一样,否则可能会遇到各种问题

38 - "to": "hzde@qq.com"

39 "route":

40 "group_by":

41 - "namespace"

42 - "alertname"

43 "group_interval": "5m"

44 "group_wait": "30s"

45 # 接收器

46 "receiver": "Email"

47 # 收敛时间,告警未恢复再次告警时间间隔

48 "repeat_interval": "1h"

49 "routes":

50 - "match":

51 "severity": "critical"

52 "receiver": "Email"

53type: Opaque

修改了配置文件,需要等待alertmanager重新加载。可以把alertmanager的service也改成NodePort进行查看。

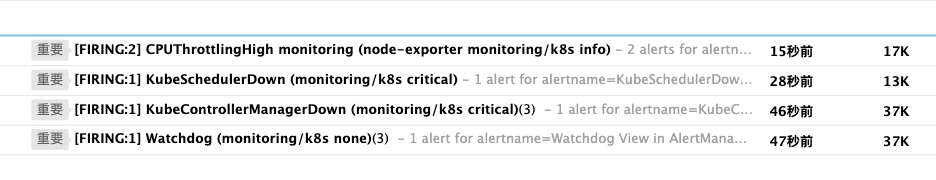

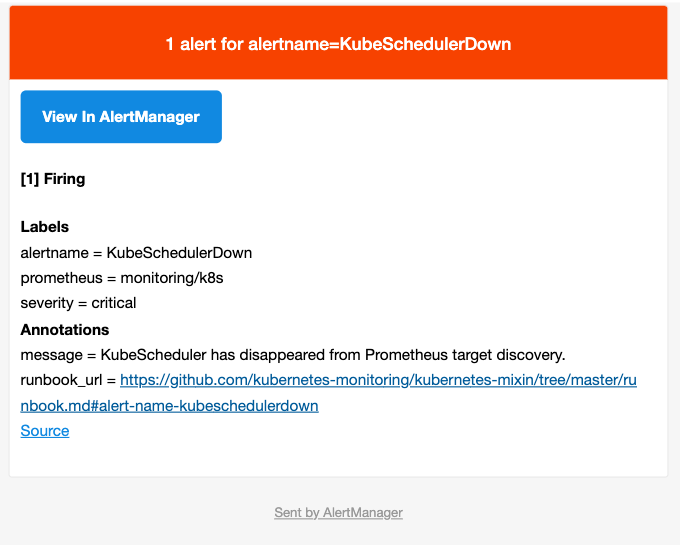

邮件告警配置生效后就能收到邮件告警了。

以下为具体的告警内容:

自定义告警规则

https://awesome-prometheus-alerts.grep.to/rules提供了许多规则,可以根据自己的实际需求参考配置,将自定义的规则放到一个单独的configmap里面,再进行挂载。

高级功能

数据持久化

alertmanager、prometheus、grafana属于有状态的服务,数据应该采用持久化存储,后端可以是glusterfs、ceph等等。

前提

需要已经安装了存储类

1kubectl get sc

2NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

3ceph-rbd (default) ceph.com/rbd Retain Immediate false 3h

prometheus数据持久化

修改prometheus-prometheus.yaml文件,在末尾加上

1 # 这部分为持久化配置

2 storage:

3 volumeClaimTemplate:

4 spec:

5 storageClassName: ceph-rbd # 存储类的名称

6 accessModes: ["ReadWriteOnce"]

7 resources:

8 requests:

9 storage: 100Gi

然后重新创建

1kubectl apply -f prometheus-prometheus.yaml

Grafana数据持久化

先为grafana创建pvc

1apiVersion: v1

2kind: PersistentVolumeClaim

3metadata:

4 name: grafana-pvc

5 namespace: monitoring

6spec:

7 accessModes:

8 - ReadWriteOnce

9 resources:

10 requests:

11 storage: 10Gi

12 storageClassName: ceph-rbd # 对应存储类的名称

修改grafana-deployment.yaml文件,挂载pv

1 volumes: 找到grafana-storage段,将之前的emptyDir修改为pvc

2 #- emptyDir: {}

3 # name: grafana-storage

4 - name: grafana-storage

5 persistentVolumeClaim:

6 claimName: grafana-pvc # 与上面创建的pvc名称保持一致

修改prometheus默认保存时长

promentheus默认保留15天的数据,可以根据自己的需要进行调整。例如将prometheus数据保存为30天。

修改setup/prometheus-operator-deployment.yaml文件

1 - args:

2 - --kubelet-service=kube-system/kubelet

3 - --logtostderr=true

4 - --config-reloader-image=jimmidyson/configmap-reload:v0.3.0

5 - --prometheus-config-reloader=quay.io/coreos/prometheus-config-reloader:v0.40.0

6 - --storage.tsdb.retention.time=30d # 在这添加time参数

参数说明

1--storage.tsdb.retention.time=STORAGE.TSDB.RETENTION.TIME

2 How long to retain samples in storage. When this flag is set it overrides "storage.tsdb.retention". If neither this flag nor "storage.tsdb.retention" nor "storage.tsdb.retention.size" is set, the retention time defaults to 15d. Units Supported: y, w, d, h, m, s, ms.

服务自动发现

前面配置了一个nginx-exporter示例,是通过serviceMonitor的方式获取到target的。我们可以使用prometheus提供的kubernetes_sd_config能力,对service或者pod进行自动发现。

Kubernetes_sd_config支持的服务发现级别有

- node

- service

- pod

- endpoints

- ingress

这里介绍一下endpoints的自动发现功能。其它发现方式可以参考官方文档kubernetes_sd_config章节。

创建一个prometheus-additional.yaml文件

1- job_name: 'kubernetes-service-endpoints'

2 kubernetes_sd_configs:

3 - role: endpoints

4 relabel_configs:

5 - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]

6 action: keep

7 regex: true

8 - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]

9 action: replace

10 target_label: __scheme__

11 regex: (https?)

12 - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]

13 action: replace

14 target_label: __metrics_path__

15 regex: (.+)

16 - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]

17 action: replace

18 target_label: __address__

19 regex: ([^:]+)(?::\d+)?;(\d+)

20 replacement: $1:$2

21 - action: labelmap

22 regex: __meta_kubernetes_service_label_(.+)

23 - source_labels: [__meta_kubernetes_namespace]

24 action: replace

25 target_label: kubernetes_namespace

26 - source_labels: [__meta_kubernetes_service_name]

27 action: replace

28 target_label: kubernetes_name

将文件创建为secret

1kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring

修改prometheus-prometheus.yaml文件,将该secret挂载上

1 # 在spec下面填写如下内容

2 additionalScrapeConfigs:

3 name: additional-configs

4 key: prometheus-additional.yaml

然后重新应用,实现挂载

1kubectl apply -f prometheus-prometheus.yaml

然后可以查看service discovery中的kubernetes-service-endpoints,前面创建的nginx-exporter的serviceMonitor也可以删除了。

- 原文作者:黄忠德

- 原文链接:https://huangzhongde.cn/post/Kubernetes/Using_kube-prometheus_deploy_monitor_system/

- 版权声明:本作品采用知识共享署名-非商业性使用-禁止演绎 4.0 国际许可协议进行许可,非商业转载请注明出处(作者,原文链接),商业转载请联系作者获得授权。