Deploying a highly available RabbitMQ cluster with HAProxy and Pacemaker

Environment

| Node | IP | VIP | Role |
|---|---|---|---|
| rabbit1 | 172.16.10.11 | | RabbitMQ |
| rabbit2 | 172.16.10.12 | | RabbitMQ |
| rabbit3 | 172.16.10.13 | | RabbitMQ |
| lvs1 | 172.16.10.14 | 172.16.10.10 | HAProxy, Pacemaker |
| lvs2 | 172.16.10.15 | 172.16.10.10 | HAProxy, Pacemaker |

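Installing RabbitMQ

Overview

A cluster can contain two kinds of nodes: RAM nodes and disk nodes.

RAM nodes keep cluster metadata in memory only, so they avoid disk I/O for that metadata and perform better than disk nodes. Disk nodes persist their state to disk and therefore recover better after a restart. Choose between the two according to this trade-off between performance and availability.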

Configure hosts

cat > /etc/hosts <<EOF

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.10.11 rabbit1

172.16.10.12 rabbit2

172.16.10.13 rabbit3

172.16.10.14 lvs1

172.16.10.15 lvs2

EOF
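Every later step refers to the nodes by name, so it can be worth confirming that the hosts file really contains all five entries. The following is a minimal sketch; the function name `missing_hosts` is hypothetical and not part of the original setup.

```shell
#!/usr/bin/env bash
# Print each expected host name that is missing from a hosts file.
# Usage: missing_hosts <hosts-file> <name>...
missing_hosts() {
    local file=$1; shift
    local name
    for name in "$@"; do
        # Look for the name as a whole field after the address column,
        # skipping lines that are commented out.
        if ! awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit found ? 0 : 1 }' "$file"; then
            echo "$name"
        fi
    done
}

# Example: check the five node names used in this guide.
# missing_hosts /etc/hosts rabbit1 rabbit2 rabbit3 lvs1 lvs2
```

Empty output means every name was found. The check only inspects the file; it does not test that the addresses are reachable.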

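Disable SELinux and the firewall

# Put SELinux into permissive mode for the current boot

setenforce 0

# Disable SELinux permanently (takes effect after a reboot)

sed -i '/^SELINUX=/s#enforcing#disabled#' /etc/selinux/config

# Stop firewalld and disable it at boot

systemctl disable --now firewalld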

Set the time zone and configure time synchronization

Keeping the clocks synchronized across the cluster is very important.

timedatectl set-timezone Asia/Shanghai

yum -y install chrony

systemctl enable --now chronyd

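Configure the RabbitMQ repository

The repository below is hosted on Bintray, which JFrog shut down in 2021. On a new installation, replace baseurl with a currently maintained RabbitMQ RPM repository.

cat > /etc/yum.repos.d/rabbitmq-3.8.repo <<EOF

[bintray-rabbitmq-server]

name=bintray-rabbitmq-rpm

baseurl=https://dl.bintray.com/rabbitmq/rpm/rabbitmq-server/v3.8.x/el/7/

gpgcheck=0

repo_gpgcheck=0

enabled=1

EOF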

Install Erlang

Erlang requirements for RabbitMQ 3.8.4 (the version installed here): minimum 21.3, maximum 23.x. This guide uses 23.0.2.

wget https://packages.erlang-solutions.com/erlang/rpm/centos/7/x86_64/esl-erlang_23.0.2-1~centos~7_amd64.rpm

rpm -ivh esl-erlang_23.0.2-1~centos~7_amd64.rpm

Install rabbitmq-server

yum -y install rabbitmq-server

Start the service

systemctl enable --now rabbitmq-server

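Configure the cluster

Synchronize the Erlang cookie

Erlang nodes authenticate each other with a shared Erlang cookie. Because rabbitmqctl uses Erlang OTP communication to talk to a RabbitMQ node, the machine running rabbitmqctl and the node it connects to must use the same cookie; otherwise the connection is rejected with an error.

# Run on rabbit1: copy .erlang.cookie to the other nodes

scp /var/lib/rabbitmq/.erlang.cookie rabbit2:/var/lib/rabbitmq/

scp /var/lib/rabbitmq/.erlang.cookie rabbit3:/var/lib/rabbitmq/

# Run on rabbit2 and rabbit3: the cookie must belong to rabbitmq and be readable only by its owner

chown rabbitmq:rabbitmq /var/lib/rabbitmq/.erlang.cookie

chmod 400 /var/lib/rabbitmq/.erlang.cookie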
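Since a mismatched cookie is a common reason for join_cluster to fail, a quick comparison after copying can save some debugging. The sketch below compares checksums of cookie files that have already been gathered on one machine; the function name `cookies_match` and the file paths in the usage note are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Succeed only if every given cookie file is readable and has identical content.
# Usage: cookies_match <file> <file>...
cookies_match() {
    local f distinct
    for f in "$@"; do
        # A missing or unreadable file counts as a mismatch.
        [ -r "$f" ] || return 2
    done
    # sha256sum prints "<hash>  <path>"; keep the hash and count distinct values.
    distinct=$(sha256sum "$@" | awk '{ print $1 }' | sort -u | wc -l)
    [ "$distinct" -eq 1 ]
}
```

One way to use it, assuming root SSH access between the nodes: fetch each remote cookie into a temporary file (for example `scp rabbit2:/var/lib/rabbitmq/.erlang.cookie /tmp/cookie.rabbit2`), then run `cookies_match /var/lib/rabbitmq/.erlang.cookie /tmp/cookie.rabbit2 /tmp/cookie.rabbit3` and delete the temporary copies afterwards.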

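Start rabbitmq-server on rabbit2 and rabbit3 and join the cluster

# Start the service (restart it instead if it was already running with a different cookie)

systemctl enable --now rabbitmq-server

# Stop the RabbitMQ application

rabbitmqctl stop_app

# Join the cluster

rabbitmqctl join_cluster rabbit@rabbit1

# Start the RabbitMQ application

rabbitmqctl start_app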

Check the cluster status

rabbitmqctl cluster_status

Cluster status of node rabbit@rabbit1 ...

Basics

Cluster name: rabbit@rabbit1

Disk Nodes

rabbit@rabbit1

rabbit@rabbit2

rabbit@rabbit3

Running Nodes

rabbit@rabbit1

rabbit@rabbit2

rabbit@rabbit3

Versions

rabbit@rabbit1: RabbitMQ 3.8.4 on Erlang 23.0.2

rabbit@rabbit2: RabbitMQ 3.8.4 on Erlang 23.0.2

rabbit@rabbit3: RabbitMQ 3.8.4 on Erlang 23.0.2

Alarms

(none)

Network Partitions

(none)

Listeners

Node: rabbit@rabbit1, interface: [::], port: 25672, protocol: clustering, purpose: inter-node and CLI tool communication

Node: rabbit@rabbit1, interface: [::], port: 5672, protocol: amqp, purpose: AMQP 0-9-1 and AMQP 1.0

Node: rabbit@rabbit2, interface: [::], port: 25672, protocol: clustering, purpose: inter-node and CLI tool communication

Node: rabbit@rabbit2, interface: [::], port: 5672, protocol: amqp, purpose: AMQP 0-9-1 and AMQP 1.0

Node: rabbit@rabbit3, interface: [::], port: 25672, protocol: clustering, purpose: inter-node and CLI tool communication

Node: rabbit@rabbit3, interface: [::], port: 5672, protocol: amqp, purpose: AMQP 0-9-1 and AMQP 1.0

Feature flags

Flag: implicit_default_bindings, state: enabled

Flag: quorum_queue, state: enabled

Flag: virtual_host_metadata, state: enabled

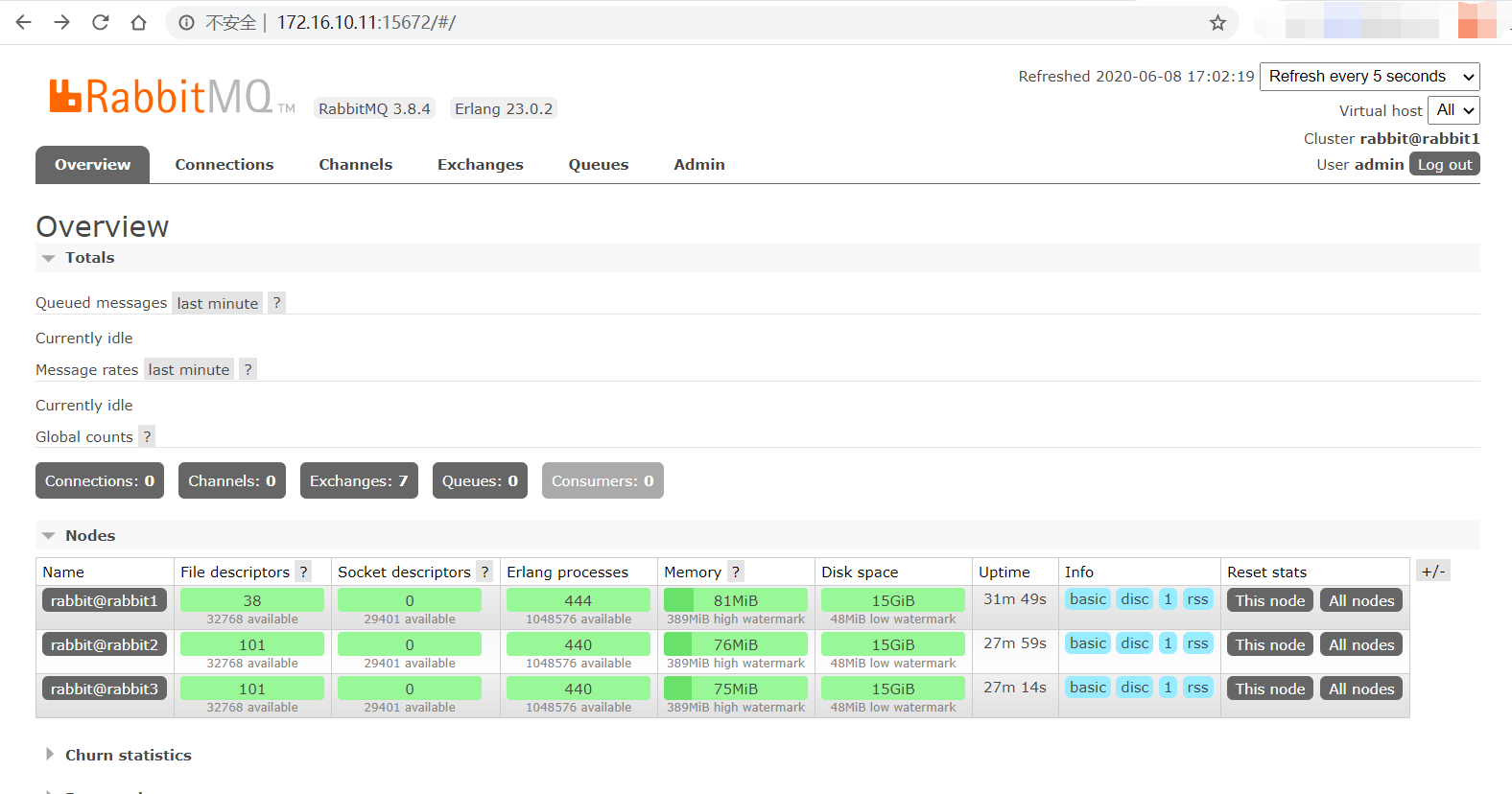

All three nodes are now members of the same cluster.
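For a scripted check rather than reading the status by eye, the "Running Nodes" section of the text output can be extracted and counted. This is a rough sketch that assumes the text layout shown in this guide (RabbitMQ 3.8.4); the function name `running_nodes` is hypothetical, and the layout may differ in other versions.

```shell
#!/usr/bin/env bash
# Read `rabbitmqctl cluster_status` text output on stdin and print
# the node names listed under the "Running Nodes" heading, one per line.
running_nodes() {
    awk '
        /^Running Nodes/      { in_section = 1; next }  # section starts here
        in_section && NF == 0 { next }                  # skip blank lines
        in_section && /@/     { print $1; next }        # node names look like name@host
        in_section            { exit }                  # next heading ends the section
    '
}

# Example: fail unless exactly three nodes are running.
# [ "$(rabbitmqctl cluster_status | running_nodes | wc -l)" -eq 3 ] || echo "cluster degraded"
```

Parsing human-readable output is fragile, so treat this as a convenience check only. If your rabbitmqctl supports a machine-readable formatter, parsing that output would likely be more robust.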

Configure queue mirroring

Run the following on any one node:

rabbitmqctl set_policy ha-all "^" '{"ha-mode":"all"}'

Setting policy "ha-all" for pattern "^" to "{"ha-mode":"all"}" with priority "0" for vhost "/" ...

Enable the web UI and create a user

# List all plugins

rabbitmq-plugins list

Listing plugins with pattern ".*" ...

 Configured: E = explicitly enabled; e = implicitly enabled

 | Status: * = running on rabbit@rabbit1

 |/

[ ] rabbitmq_amqp1_0 3.8.4

[ ] rabbitmq_auth_backend_cache 3.8.4

[ ] rabbitmq_auth_backend_http 3.8.4

[ ] rabbitmq_auth_backend_ldap 3.8.4

[ ] rabbitmq_auth_backend_oauth2 3.8.4

[ ] rabbitmq_auth_mechanism_ssl 3.8.4

[ ] rabbitmq_consistent_hash_exchange 3.8.4

[ ] rabbitmq_event_exchange 3.8.4

[ ] rabbitmq_federation 3.8.4

[ ] rabbitmq_federation_management 3.8.4

[ ] rabbitmq_jms_topic_exchange 3.8.4

[ ] rabbitmq_management 3.8.4

[ ] rabbitmq_management_agent 3.8.4

[ ] rabbitmq_mqtt 3.8.4

[ ] rabbitmq_peer_discovery_aws 3.8.4

[ ] rabbitmq_peer_discovery_common 3.8.4

[ ] rabbitmq_peer_discovery_consul 3.8.4

[ ] rabbitmq_peer_discovery_etcd 3.8.4

[ ] rabbitmq_peer_discovery_k8s 3.8.4

[ ] rabbitmq_prometheus 3.8.4

[ ] rabbitmq_random_exchange 3.8.4

[ ] rabbitmq_recent_history_exchange 3.8.4

[ ] rabbitmq_sharding 3.8.4

[ ] rabbitmq_shovel 3.8.4

[ ] rabbitmq_shovel_management 3.8.4

[ ] rabbitmq_stomp 3.8.4

[ ] rabbitmq_top 3.8.4

[ ] rabbitmq_tracing 3.8.4

[ ] rabbitmq_trust_store 3.8.4

[ ] rabbitmq_web_dispatch 3.8.4

[ ] rabbitmq_web_mqtt 3.8.4

[ ] rabbitmq_web_mqtt_examples 3.8.4

[ ] rabbitmq_web_stomp 3.8.4

[ ] rabbitmq_web_stomp_examples 3.8.4

# Enable the management plugin

# Plugins are enabled per node, so run this on all three servers

rabbitmq-plugins enable rabbitmq_management

Enabling plugins on node rabbit@rabbit1:

rabbitmq_management

The following plugins have been configured:

 rabbitmq_management

 rabbitmq_management_agent

 rabbitmq_web_dispatch

Applying plugin configuration to rabbit@rabbit1...

The following plugins have been enabled:

 rabbitmq_management

 rabbitmq_management_agent

 rabbitmq_web_dispatch

started 3 plugins.

Create a user and grant permissions

# Create the user (pick a stronger password than this example in production)

rabbitmqctl add_user admin admin

Adding user "admin" ...

# Grant the administrator tag

rabbitmqctl set_user_tags admin administrator

Setting tags for user "admin" to [administrator] ...

# Grant admin configure, write and read permissions on all resources in vhost "/"

rabbitmqctl set_permissions -p / admin ".*" ".*" ".*"

Setting permissions for user "admin" in vhost "/" ...

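Web access

Open http://172.16.10.11:15672 (15672 is the management plugin's default port) and log in with the admin user created above.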

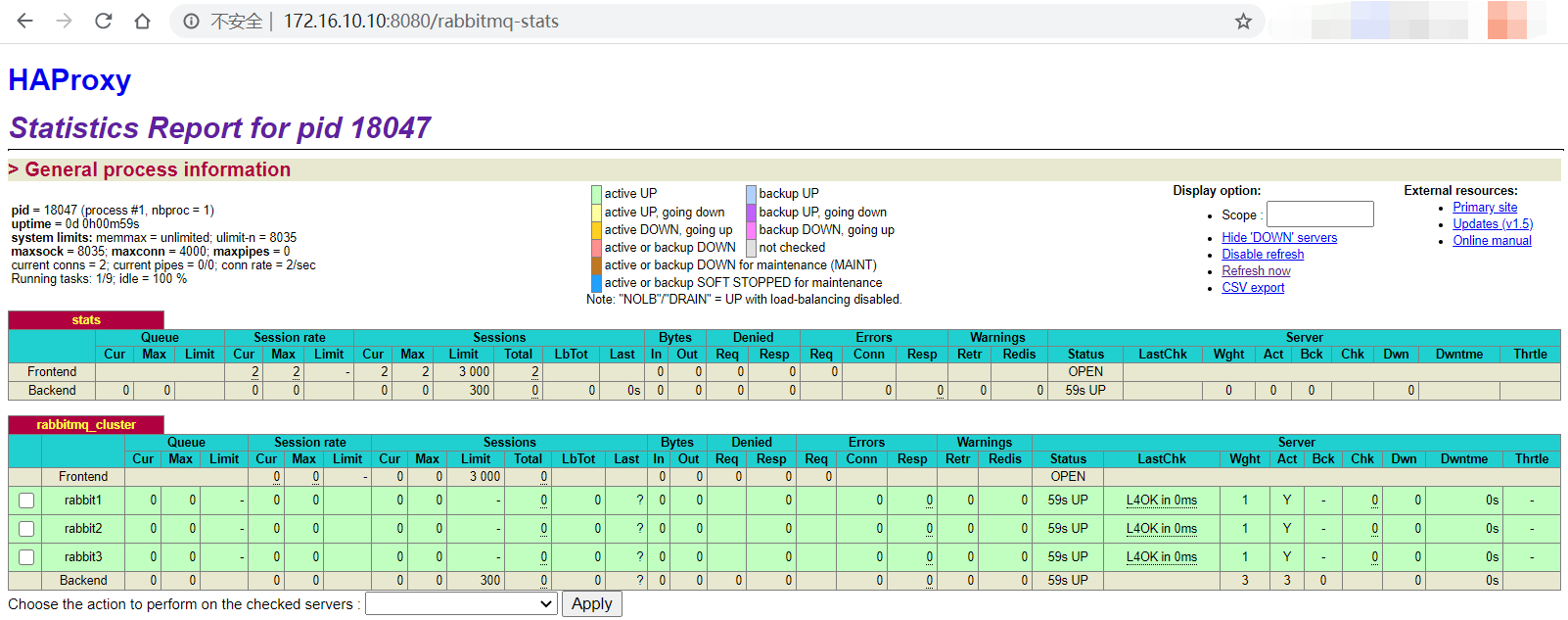

HAProxy

Install HAProxy (on lvs1 and lvs2)

yum -y install haproxy

Configure HAProxy

cat > /etc/haproxy/haproxy.cfg <<EOF

global

    log 127.0.0.1 local2 # send all logs to the local syslog daemon, facility local2

    chroot /var/lib/haproxy

    pidfile /var/run/haproxy.pid

    maxconn 4000 # global connection limit; keep the ulimit -n limit in mind

    user haproxy

    group haproxy

    daemon # run HAProxy in the background

    stats socket /var/lib/haproxy/stats

defaults

    mode tcp # default mode {tcp|http|health}: tcp is layer 4, http is layer 7, health only returns OK

    log global

    option tcplog # use the TCP log format

    option dontlognull # do not log connections that carry no data, such as health-check probes

    option redispatch

    retries 3 # retry a failed connection up to three times; can be overridden in later sections

    timeout http-request 10s

    timeout queue 1m

    timeout connect 10s # connection timeout

    timeout client 1m # client inactivity timeout

    timeout server 1m # server inactivity timeout

    timeout http-keep-alive 10s

    timeout check 10s

    maxconn 3000 # default per-proxy connection limit

listen stats

    bind *:8080

    mode http

    option httplog

    stats enable

    stats hide-version

    stats uri /rabbitmq-stats

    stats admin if TRUE

    stats auth admin:admin

    stats refresh 10s

listen rabbitmq_cluster # a listen section combines a frontend and a backend

    bind *:5672 # binding to *:5672 is recommended here

    mode tcp

    balance roundrobin

    server rabbit1 172.16.10.11:5672 check inter 5000 rise 2 fall 2

    server rabbit2 172.16.10.12:5672 check inter 5000 rise 2 fall 2

    server rabbit3 172.16.10.13:5672 check inter 5000 rise 2 fall 2

EOF

Pacemaker

Install Pacemaker (on lvs1 and lvs2)

yum -y install pcs pacemaker corosync

Start pcsd

systemctl enable --now pcsd

Configure the Pacemaker cluster

Set a password for the hacluster user

id hacluster

uid=189(hacluster) gid=189(haclient) groups=189(haclient)

passwd hacluster

Changing password for user hacluster.

New password:

BAD PASSWORD: The password contains the user name in some form

Retype new password:

passwd: all authentication tokens updated successfully.

Create the Pacemaker cluster

Run the following on only one of the lvs nodes.

pcs cluster auth lvs1 lvs2

Username: hacluster

Password: # enter the password set above

lvs1: Authorized

lvs2: Authorized

pcs cluster setup --name pcs_cluster lvs1 lvs2

Destroying cluster on nodes: lvs1, lvs2...

lvs1: Stopping Cluster (pacemaker)...

lvs2: Stopping Cluster (pacemaker)...

lvs2: Successfully destroyed cluster

lvs1: Successfully destroyed cluster

Sending 'pacemaker_remote authkey' to 'lvs1', 'lvs2'

lvs1: successful distribution of the file 'pacemaker_remote authkey'

lvs2: successful distribution of the file 'pacemaker_remote authkey'

Sending cluster config files to the nodes...

lvs1: Succeeded

lvs2: Succeeded

Synchronizing pcsd certificates on nodes lvs1, lvs2...

lvs2: Success

lvs1: Success

Restarting pcsd on the nodes in order to reload the certificates...

lvs2: Success

lvs1: Success

# Start the cluster on all nodes

pcs cluster start --all

lvs1: Starting Cluster (corosync)...

lvs2: Starting Cluster (corosync)...

lvs2: Starting Cluster (pacemaker)...

lvs1: Starting Cluster (pacemaker)...

# Start the cluster automatically at boot on all nodes

pcs cluster enable --all

lvs1: Cluster Enabled

lvs2: Cluster Enabled

# Check the cluster status

pcs status

Cluster name: pcs_cluster

WARNINGS:

No stonith devices and stonith-enabled is not false

Stack: corosync

Current DC: lvs1 (version 1.1.21-4.el7-f14e36fd43) - partition with quorum

Last updated: Mon Jun 8 10:53:58 2020

Last change: Mon Jun 8 10:53:46 2020 by hacluster via crmd on lvs1

2 nodes configured

0 resources configured

Online: [ lvs1 lvs2 ]

No resources

Daemon Status:

 corosync: active/enabled

 pacemaker: active/enabled

 pcsd: active/enabled

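Disable STONITH

No fencing device is configured in this setup, so disable STONITH to clear the warning shown above.

pcs property set stonith-enabled=false

Ignore quorum loss in the two-node cluster

With only two nodes, losing one also loses quorum, so tell Pacemaker to keep running resources anyway.

pcs property set no-quorum-policy=ignore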

Create the HAProxy cluster resource

pcs resource create lb-haproxy systemd:haproxy --clone

Create the virtual IP cluster resource

# Replace ens33 with the name of the network interface on your lvs nodes

pcs resource create vip-rabbitmq IPaddr2 ip=172.16.10.10 \

 cidr_netmask=32 nic=ens33 op monitor interval=30s

Assumed agent name 'ocf:heartbeat:IPaddr2' (deduced from 'IPaddr2')

# Start the VIP before HAProxy where possible

pcs constraint order start vip-rabbitmq then lb-haproxy-clone kind=Optional

Adding vip-rabbitmq lb-haproxy-clone (kind: Optional) (Options: first-action=start then-action=start)

# Keep the VIP on a node where HAProxy is running

pcs constraint colocation add vip-rabbitmq with lb-haproxy-clone

Check the status

pcs status

Cluster name: pcs_cluster

Stack: corosync

Current DC: lvs1 (version 1.1.21-4.el7-f14e36fd43) - partition with quorum

Last updated: Mon Jun 8 23:06:12 2020

Last change: Mon Jun 8 23:06:07 2020 by root via cibadmin on lvs1

2 nodes configured

3 resources configured

Online: [ lvs1 lvs2 ]

Full list of resources:

 Clone Set: lb-haproxy-clone [lb-haproxy]

     Started: [ lvs1 lvs2 ]

 vip-rabbitmq (ocf::heartbeat:IPaddr2): Started lvs1

Daemon Status:

 corosync: active/enabled

 pacemaker: active/enabled

 pcsd: active/enabled

The Pacemaker cluster is now up and running.

From here on, applications should talk to RabbitMQ through the VIP (172.16.10.10:5672).
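Before pointing applications at the VIP, a simple TCP probe can confirm that something is accepting connections there. The sketch below relies on bash's `/dev/tcp` pseudo-device and the coreutils `timeout` command; the function name `port_open` is hypothetical.

```shell
#!/usr/bin/env bash
# Return 0 if a TCP connection to host:port succeeds within the timeout.
# Usage: port_open <host> <port> [timeout-seconds]
port_open() {
    local host=$1 port=$2 wait=${3:-3}
    # /dev/tcp is a bash feature; run it in a child bash so `timeout` can bound it.
    timeout "$wait" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}

# Example: probe the VIP used in this guide.
# port_open 172.16.10.10 5672 && echo "AMQP port reachable via VIP"
```

A successful connect only shows that HAProxy accepted the connection on the VIP; it does not prove that a broker behind it completed an AMQP handshake. Running the probe again after putting the active lvs node into standby is one way to observe the failover.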

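- Original author: 黄忠德

- Original link: https://huangzhongde.cn/post/Linux/RabbitMQ_HA_with_haproxy_and_pacemaker/

- Copyright notice: This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. For non-commercial reuse, credit the source (author and original link); for commercial reuse, contact the author for permission.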